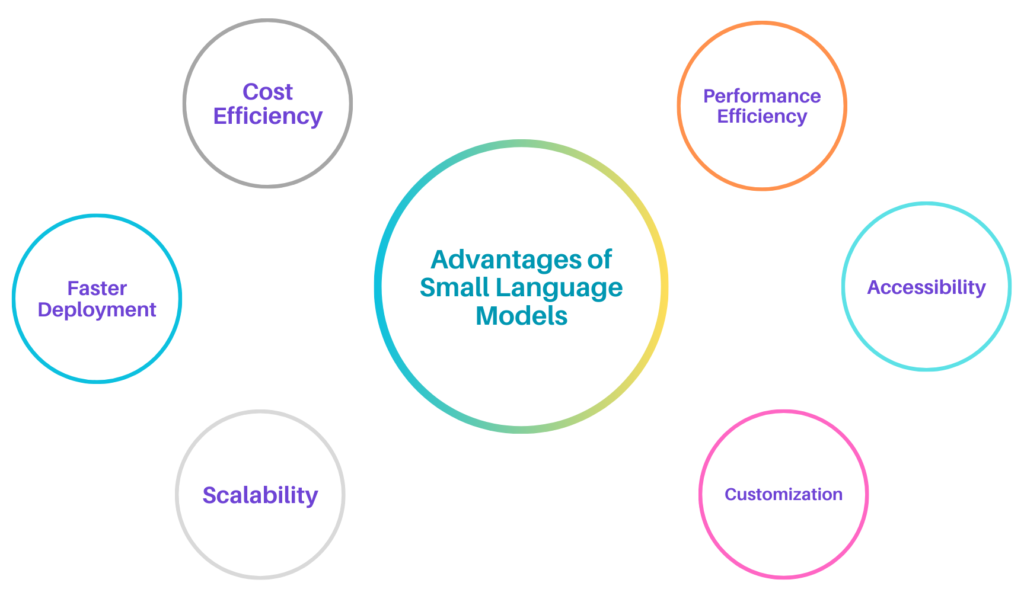

Small language models (SLMs) represent a significant development in the AI field. Offering cost-effectiveness, faster training, accessibility, scalability, and strong performance, SLMs are an efficient alternative to LLMs.

Artificial Intelligence (AI) has been heralded as a transformative force for years. It has revolutionized industries by enhancing productivity and driving unprecedented innovation. AI advancements have powered large language models (LLMs) that understand, generate, and manipulate human language. However, LLMs like GPT-3 and GPT-4 require significant computational resources and incur hefty costs.

This has led to growing interest in smaller, more affordable AI models: small language models (SLMs). These models promise to democratize access to advanced language processing capabilities.

This article explores the potential of small language models (SLMs) and their impact on the future of affordable AI. It also covers their benefits, challenges, and technological innovations, with an emphasis on how SLMs compare with LLMs. So, let’s get started.


From Big to Small: Understanding Small Language Models

While large language models have already left their mark with their impressive capabilities, small language models are poised to become the next frontier in AI. Unlike LLMs such as GPT-3, which has 175 billion parameters, SLMs are designed to offer many of the same capabilities in a smaller package, with fewer parameters and less training data.

Recently, Microsoft introduced Phi-3, a family of open AI models that the company describes as the most capable and cost-effective small language models (SLMs) available. According to Microsoft, Phi-3 models outperform models of the same size and the next size up across a variety of benchmarks evaluating language, coding, and math capabilities.

Small Language Models (SLMs) vs. Large Language Models (LLMs)

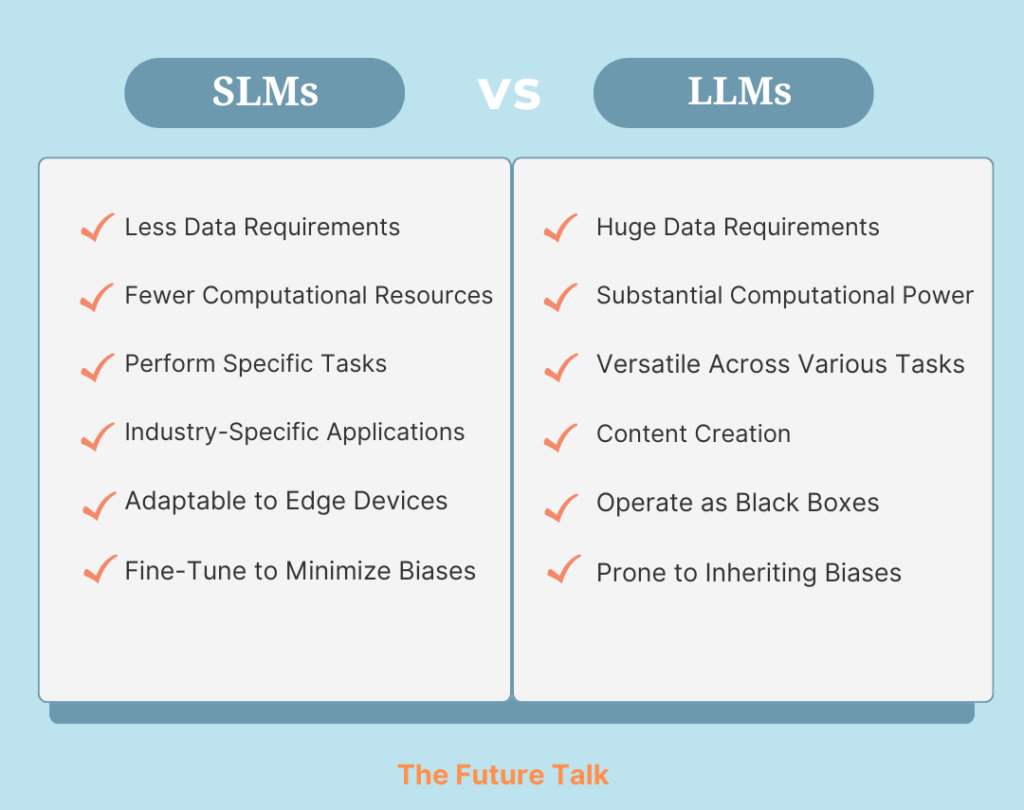

Both LLMs and SLMs have distinct characteristics, advantages, and limitations. The most fundamental difference is that SLMs are built with far fewer parameters than LLMs.

Here are the differences between Small Language Models (SLMs) and Large Language Models (LLMs):

Size: SLMs are smaller than LLMs. While LLMs can contain tens or hundreds of billions of parameters, SLMs range from a few million to a few billion parameters. For instance, Phi-3-mini has 3.8 billion parameters.

Cost-Effectiveness: SLMs are more cost-effective as they have lower computational and infrastructure requirements. LLMs are expensive to build and maintain due to high resource requirements.

Efficiency: SLMs are highly efficient for specific tasks. Conversely, LLMs are less efficient because of their size, although they offer broad capabilities.

Training Data and Resources: An SLM requires less data and fewer computational resources to train, and training can be completed in weeks. LLMs can take months to train and require vast datasets and substantial computational power.

Performance and Accuracy: SLMs can achieve high accuracy on targeted applications, while LLMs deliver accuracy and fluency across diverse tasks.

Device Compatibility: While SLMs are compatible with edge devices and local deployment, LLMs typically require high-performance servers and cloud infrastructure.

Task Scope: SLMs perform well on specialized tasks. LLMs, on the other hand, excel at complex language tasks that require strong generalization.

Control: With SLMs, organizations can run models on their own servers, fine-tune them, and control them as needed. LLMs, which are often accessed through third-party APIs, can be less predictable and operate more like black boxes.

Democratizing AI

Small language models (SLMs) have the potential to democratize AI access. Unlike LLMs, which require substantial computational resources, SLMs can run on more common and affordable hardware, such as laptops and even smartphones. This accessibility enables small businesses, startups, educational institutions, and individual developers to leverage AI at a low cost.
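To make the hardware point concrete, here is a back-of-the-envelope sketch that estimates how much memory a model's weights occupy: the parameter count multiplied by the bytes stored per parameter. It is a rough illustration under stated assumptions, not a sizing tool. It counts weights only (no activations, KV cache, or runtime overhead), uses 1 GB = 10^9 bytes, and the 8-bit and 4-bit rows assume the model has been quantized, a common but not universal way of running SLMs on consumer hardware. Parameter counts come from the models named in this article.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions: weights only (ignores activations, KV cache, and runtime
# overhead), and 1 GB = 1e9 bytes, so real memory use will be higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit floating point
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # assumes 8-bit quantization
    "int4": 0.5,  # assumes 4-bit quantization
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate size of the model weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts for models mentioned in this article.
MODELS = {
    "GPT-3": 175e9,
    "Phi-3-mini": 3.8e9,
    "GPT-2 (small)": 124e6,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name:<14} {sizes}")
```

Under these assumptions, Phi-3-mini's weights come to about 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, whereas a 175-billion-parameter model needs about 350 GB at 16-bit. That gap of roughly two orders of magnitude is why models in the SLM range can fit on a laptop while GPT-3-scale models need server hardware.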

Another factor that helps democratize AI is the ability to customize small language models. SLMs can be tailored to an organization’s specific requirements, which can help with security and privacy, for example by keeping sensitive data on in-house infrastructure. Their smaller size may also reduce their exposure to malicious attacks.

With lower computational requirements and strong performance and accuracy on targeted tasks, SLMs allow users to innovate and develop AI-powered solutions tailored to their specific needs.

Examples of Small Language Models (SLMs)

Here are some examples of small language models:

| Model | Parameters | Developer |
|---|---|---|
| Phi-3-mini | 3.8 billion | Microsoft |
| GPT-2 (small version) | 124 million | OpenAI |
| ALBERT (base) | 12 million | Google Research |
| TinyBERT (4-layer) | 14.5 million | Huawei |
| ELECTRA (small variant) | 14 million | Google Research |

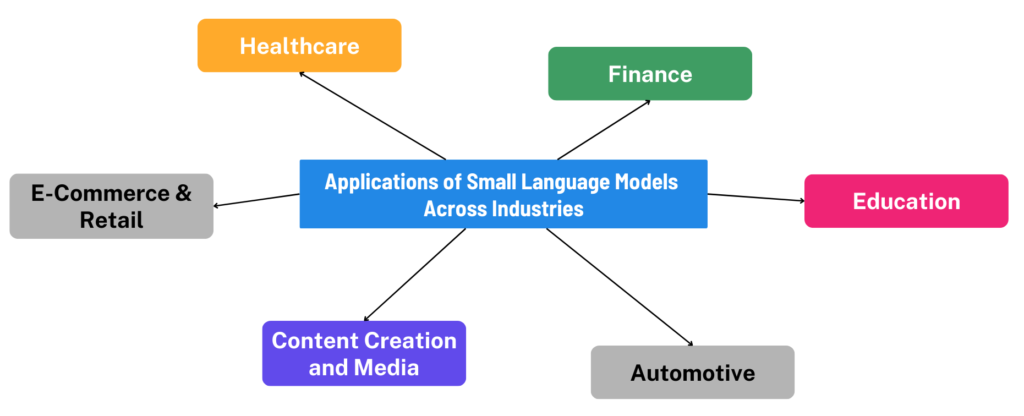

Applications of Small Language Models

As the adoption of AI continues to grow, small language models will improve and their application domains will expand. From healthcare and finance to education and entertainment, these AI models will open numerous opportunities across industries, transforming business functions by providing affordable and efficient AI solutions.

Challenges of Small Language Models (SLMs)

Language models have become a catalyst for artificial intelligence (AI) technology. They are revolutionizing natural language processing (NLP) and creating new avenues for human-machine interaction. While small language models (SLMs) promise a lot, they also present challenges.

- Scalability and Flexibility – Unlike LLMs, which can be scaled up with more computational power and data, SLMs need innovative approaches to improve their performance.

- Accuracy – Because SLMs are designed to run on fewer computational resources than LLMs, researchers must maximize efficiency without compromising too much on the model’s accuracy and capabilities.

- Contextual Understanding – SLMs often struggle to understand and maintain context over long passages. Addressing this requires techniques that improve the model’s ability to generate contextually appropriate responses.

Future Directions of Small Language Models

On the rise of small language models, Hugging Face CEO Clem Delangue predicted, “In 2024, most companies will realize that smaller, cheaper, more specialized models make more sense for 99% of AI use cases. The current market & usage are fooled by companies sponsoring the cost of training and running big models behind APIs (especially with cloud incentives).”

Companies like Microsoft, Meta, and Google are actively developing smaller AI models with fewer parameters. Examples include Meta’s Llama 3 8B (8 billion parameters) and Microsoft’s Phi-3-mini (3.8 billion parameters). Several start-ups, such as Mistral, also offer small language models tailored to specific applications.

With SLMs, enterprises can make the most of AI to drive innovation, enhance operations, and maintain a competitive edge in their respective industries.


Conclusion

Small language models (SLMs) are playing a growing role in the democratization of AI. Unlike their larger, resource-intensive counterparts, SLMs provide a more accessible, cost-effective solution with a high degree of functionality.

As the technology continues to mature, we at The Future Talk believe that the impact of small language models is likely to grow, shaping the future of the AI landscape.

Stay tuned to The Future Talk for more such interesting topics. Comment your thoughts and join the conversation.