With SambaNova Cloud, developers can build their own generative AI applications using both Llama 3.1 405B and the lightning-fast Llama 3.1 70B.

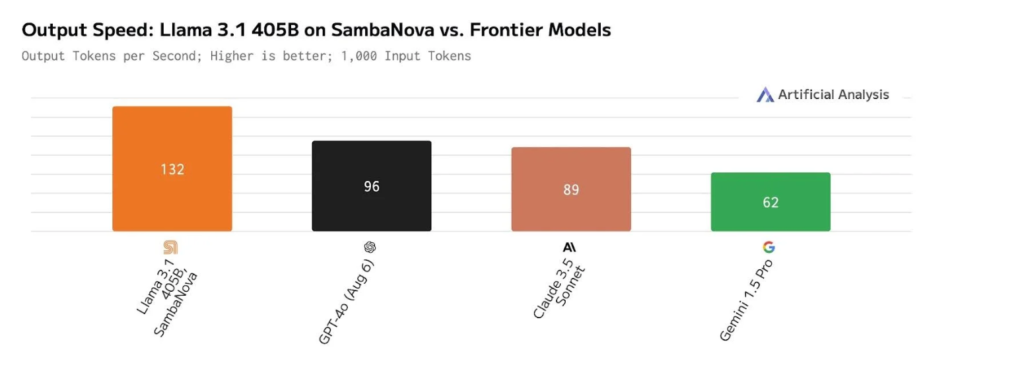

SambaNova Systems, a provider of AI chips and models, has launched SambaNova Cloud, billed as the world’s fastest AI inference service and powered by the company’s SN40L AI chip. The platform runs Llama 3.1 70B at 461 tokens per second (t/s) and Llama 3.1 405B at 132 t/s at full precision.

SambaNova Systems CEO Rodrigo Liang said, “SambaNova Cloud is the fastest API service for developers. We deliver world record speed and in full 16-bit precision – all enabled by the world’s fastest AI chip.” He added, “SambaNova Cloud is bringing the most accurate open source models to the vast developer community at speeds they have never experienced before.”

Developers can sign up for free API access to SambaNova Cloud and build their own generative AI applications with either Llama 3.1 405B, the largest and most capable model, or the lightning-fast Llama 3.1 70B.

According to Liang, “Competitors are not offering the 405B model to developers today because of their inefficient chips. Providers running on Nvidia GPUs are reducing the precision of this model, hurting its accuracy, and running it at unusably slow speeds. Only SambaNova is running 405B — the best open-source model created — at full precision and 132 tokens per second.”

Llama 3.1 405B is an extremely large model, so it is costly and complex to deploy and is served more slowly than smaller models. Compared with Nvidia H100s, SambaNova’s SN40L chips reduce that cost and complexity, and because they serve the model faster, they also reduce the speed trade-off.

DeepLearning.AI founder Dr. Andrew Ng said, “Agentic workflows are delivering excellent results for many applications. Because they need to process a large number of tokens to generate the final result, fast token generation is critical.”

“The best open weights model today is Llama 3.1 405B, and SambaNova is the only provider running this model at 16-bit precision and at over 100 tokens/second. This impressive technical achievement opens up exciting capabilities for developers building using LLMs,” he added.
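To make the point about token throughput concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the two throughput figures quoted in this article. The workflow size (10 sequential steps of 500 output tokens each) and the helper name `generation_seconds` are illustrative assumptions, not SambaNova figures or API calls. The estimate also ignores network latency, prompt processing, and queueing.

```python
# Throughput figures quoted in the article, in tokens per second (t/s).
THROUGHPUT_TPS = {
    "Llama 3.1 70B": 461,
    "Llama 3.1 405B": 132,
}

# Hypothetical agentic workflow (assumed for illustration only).
STEPS = 10             # sequential model calls
TOKENS_PER_STEP = 500  # output tokens generated per call


def generation_seconds(total_tokens: int, tokens_per_second: float) -> float:
    """Return the time needed to generate total_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return total_tokens / tokens_per_second


total_tokens = STEPS * TOKENS_PER_STEP
for model, tps in THROUGHPUT_TPS.items():
    seconds = generation_seconds(total_tokens, tps)
    print(f"{model}: {seconds:.1f} s to generate {total_tokens} tokens")
```

Under these assumed numbers, generating 5,000 tokens takes roughly 10.8 seconds on the 70B model and 37.9 seconds on the 405B model. Because the steps in an agentic workflow run one after another, any slowdown in generation speed lengthens the whole run proportionally.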

SambaNova Cloud has been independently benchmarked by Artificial Analysis, which confirmed it as the fastest AI platform for Llama 3.1 models. Because it outperforms products from competitors such as Google, Anthropic, and OpenAI, the service is well suited to agentic workflows and real-time AI applications.

With SambaNova Cloud, developers can build agentic apps that run at unprecedented speed, with Llama 3.1 70B executing at 461 t/s. SambaNova Cloud is available in three tiers: Free, Developer, and Enterprise.

The Free Tier offers free API access to anyone who logs in. The Developer Tier, which will be available by the end of 2024, lets developers build with higher rate limits on the Llama 3.1 8B, 70B, and 405B models. The Enterprise Tier lets enterprise customers scale with higher rate limits to power production workloads.

Stay tuned to The Future Talk for more AI news and insights!